IllumHarmony-Dataset

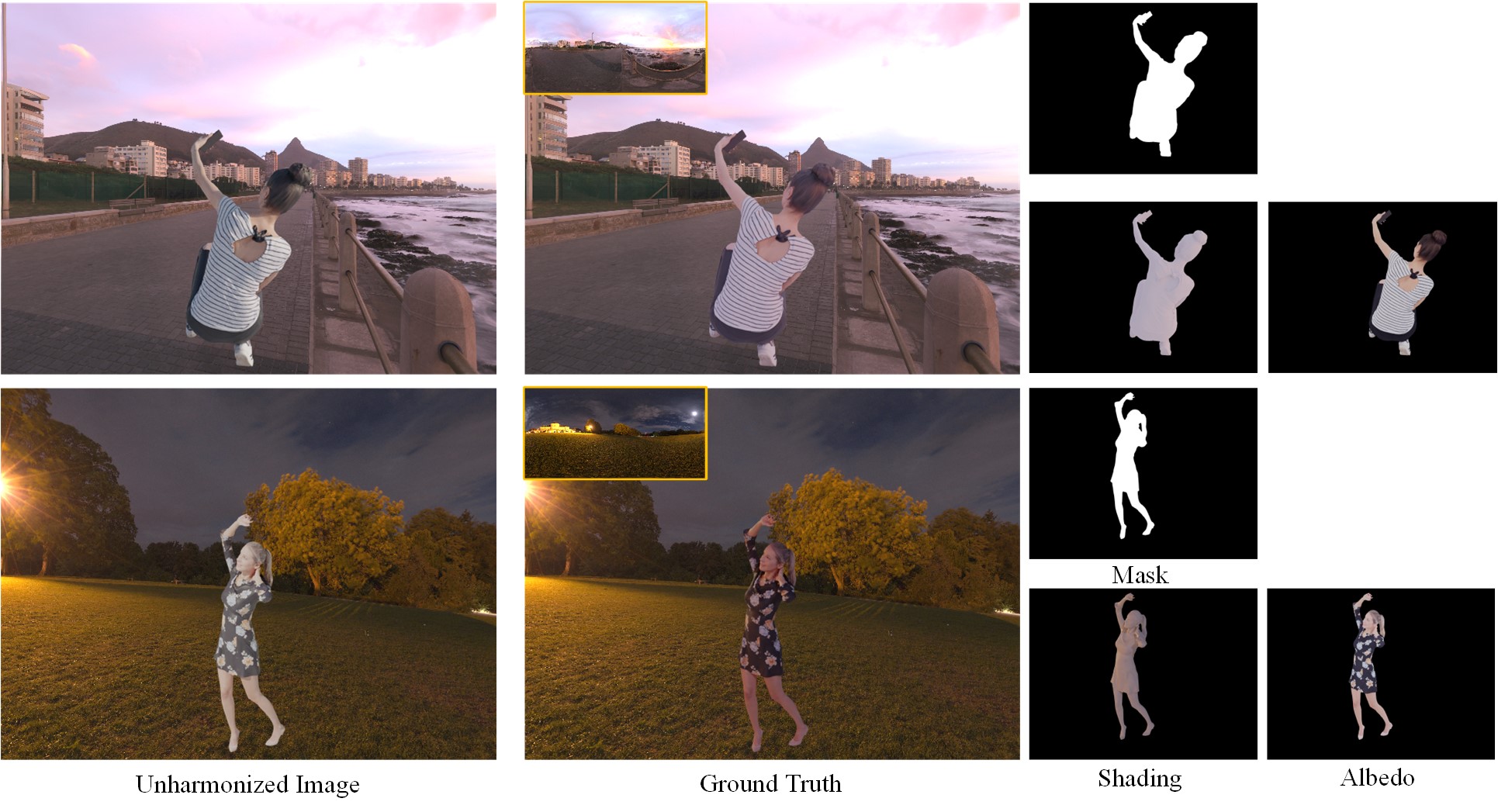

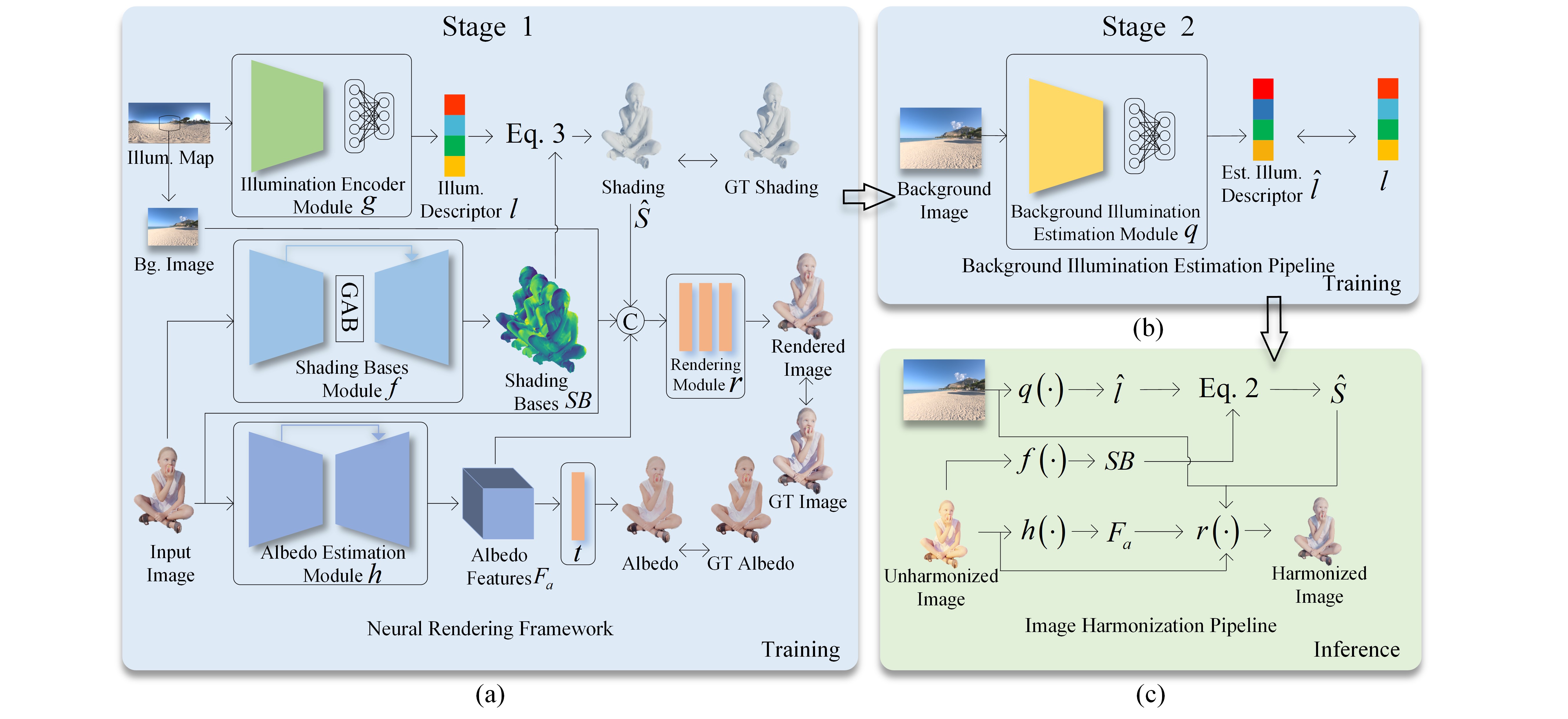

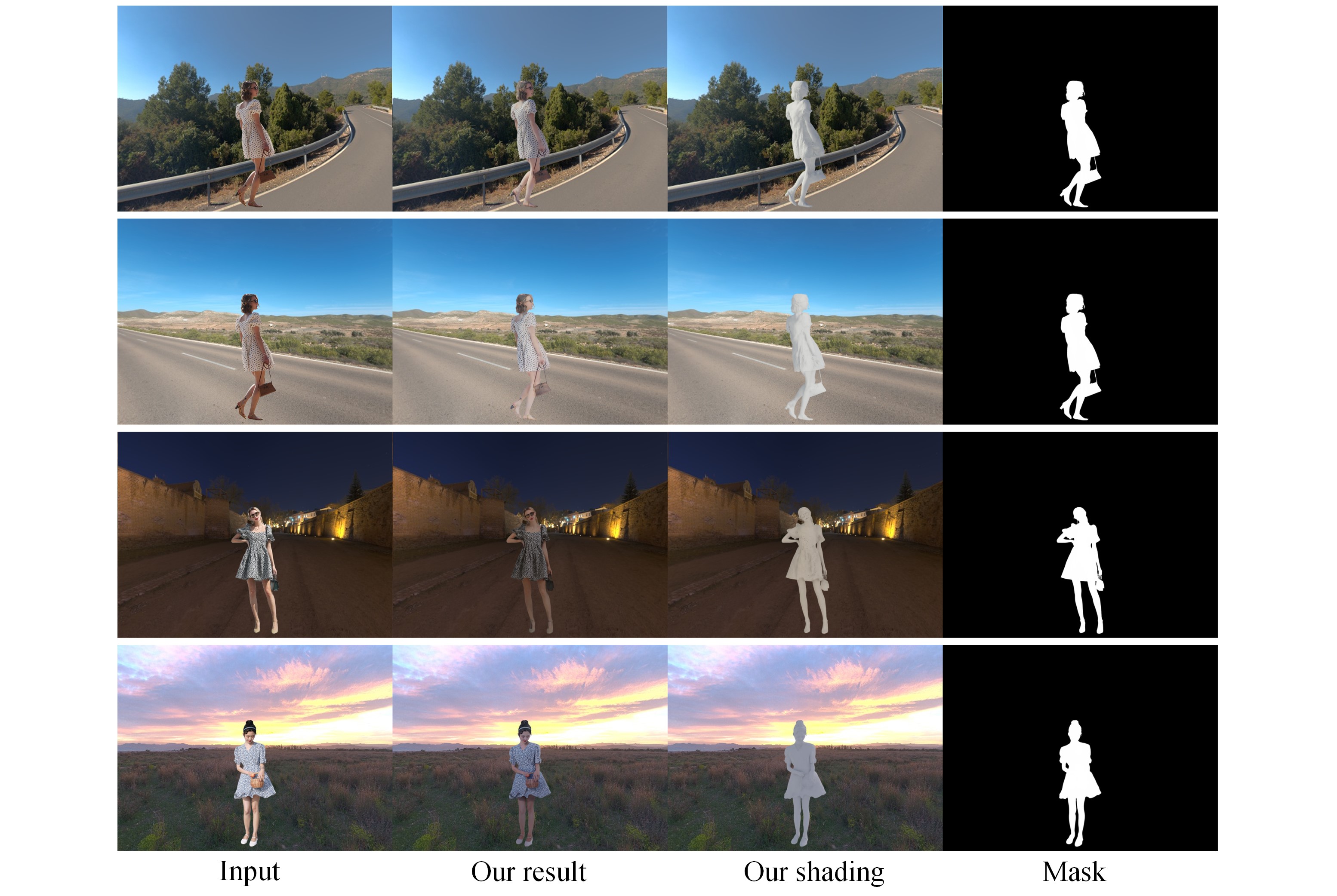

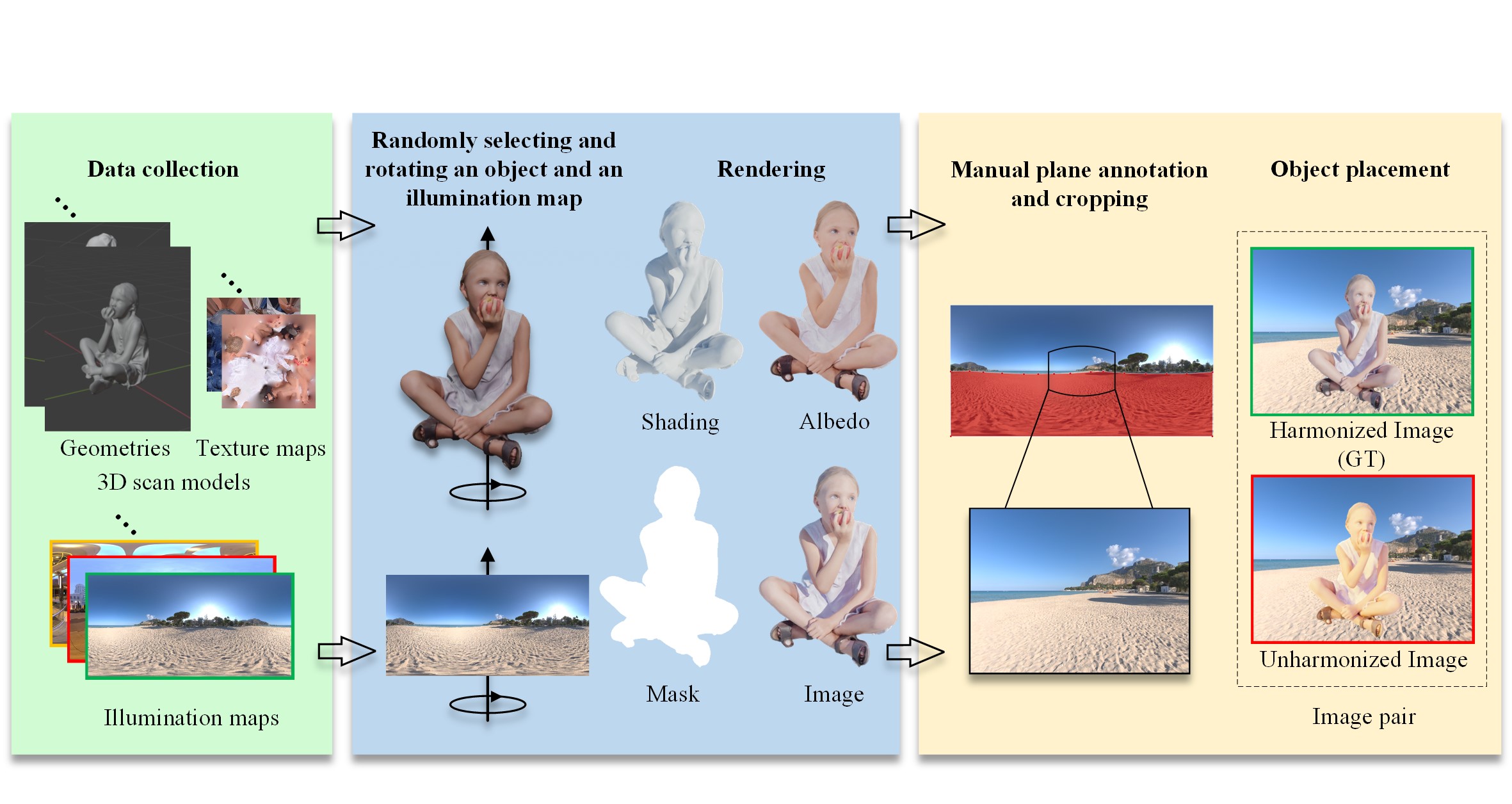

The pipeline for constructing pairs of harmonized and unharmonized images in the IllumHarmony-Dataset. It covers three main stages: data collection, rendering, and object placement. Shading and albedo are also rendered to train SIDNet.
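To make the pairing idea concrete, here is a minimal sketch of how one harmonized/unharmonized pair could be assembled from rendered layers. It rests on two assumptions that the caption does not state: that the foreground follows the common multiplicative intrinsic model (image = albedo × shading), and that the unharmonized foreground is obtained by shading the same object under a mismatched illumination. The function names (`compose`, `paste`, `make_pair`) are hypothetical and are not part of the dataset's tooling. Images are flat lists of grayscale values to keep the example dependency-free.

```python
def compose(albedo, shading):
    """Recombine intrinsic layers per pixel (assumed model: image = albedo * shading)."""
    return [a * s for a, s in zip(albedo, shading)]


def paste(foreground, background, mask):
    """Alpha-composite the foreground onto the background using a [0, 1] mask."""
    return [m * f + (1.0 - m) * b for f, b, m in zip(foreground, background, mask)]


def make_pair(albedo, shading_matched, shading_mismatched, background, mask):
    """Return (harmonized, unharmonized) composites for one object placement.

    Assumption: the two images differ only in the foreground shading, which is
    rendered under the background's illumination (matched) or under a
    different illumination (mismatched).
    """
    harmonized = paste(compose(albedo, shading_matched), background, mask)
    unharmonized = paste(compose(albedo, shading_mismatched), background, mask)
    return harmonized, unharmonized


# Two-pixel toy example: pixel 0 belongs to the object, pixel 1 to the background.
harmonized, unharmonized = make_pair(
    albedo=[0.5, 0.5],
    shading_matched=[1.0, 0.5],
    shading_mismatched=[0.2, 0.2],
    background=[0.3, 0.3],
    mask=[1.0, 0.0],
)
```

In this toy case only the object pixel differs between the two composites, while the background pixel is identical in both. That is the property a harmonization model relies on when it learns to correct the foreground alone.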

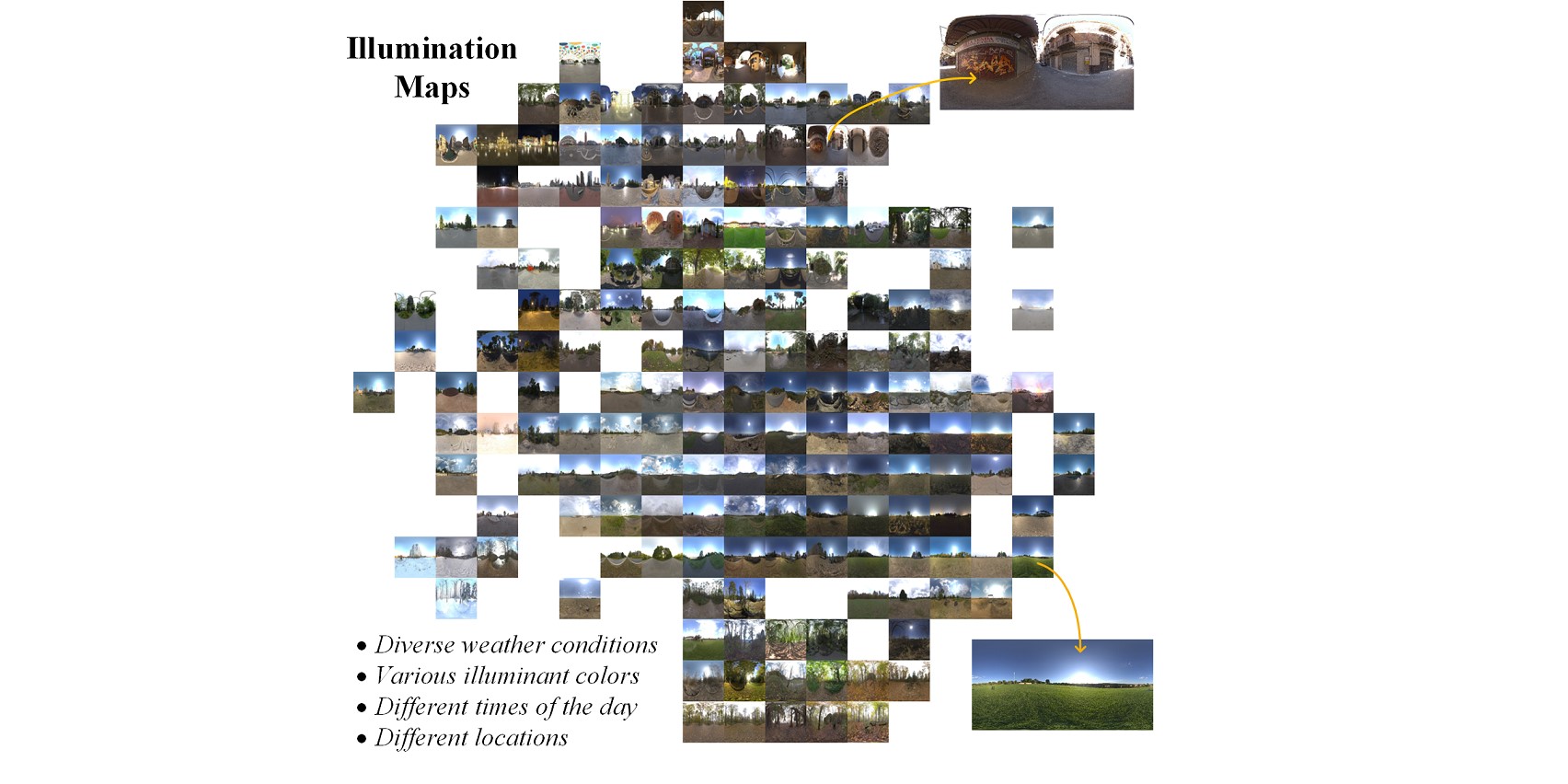

A t-SNE visualization of our collected illumination maps, which cover diverse weather conditions, illuminant colors, times of day, and locations.

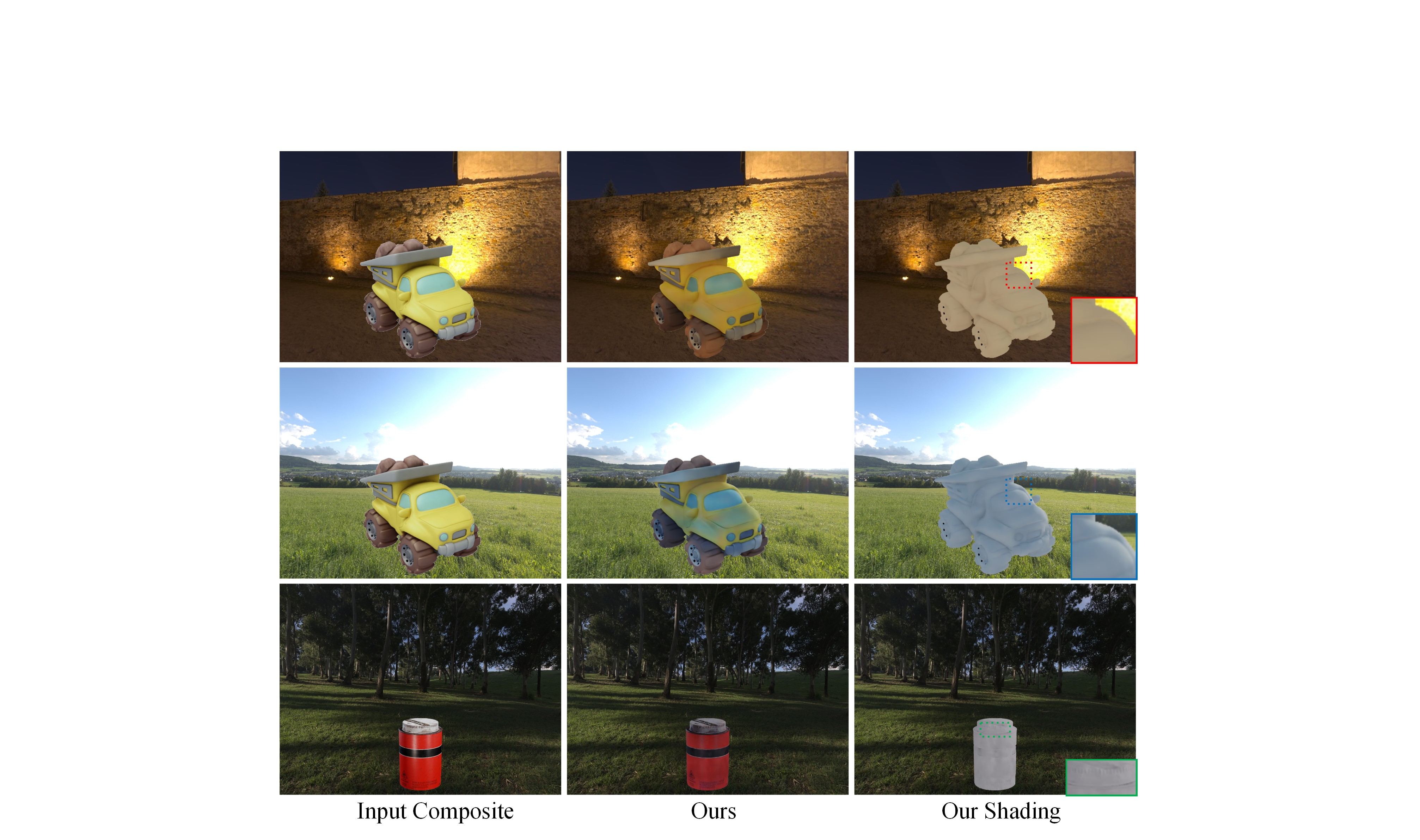

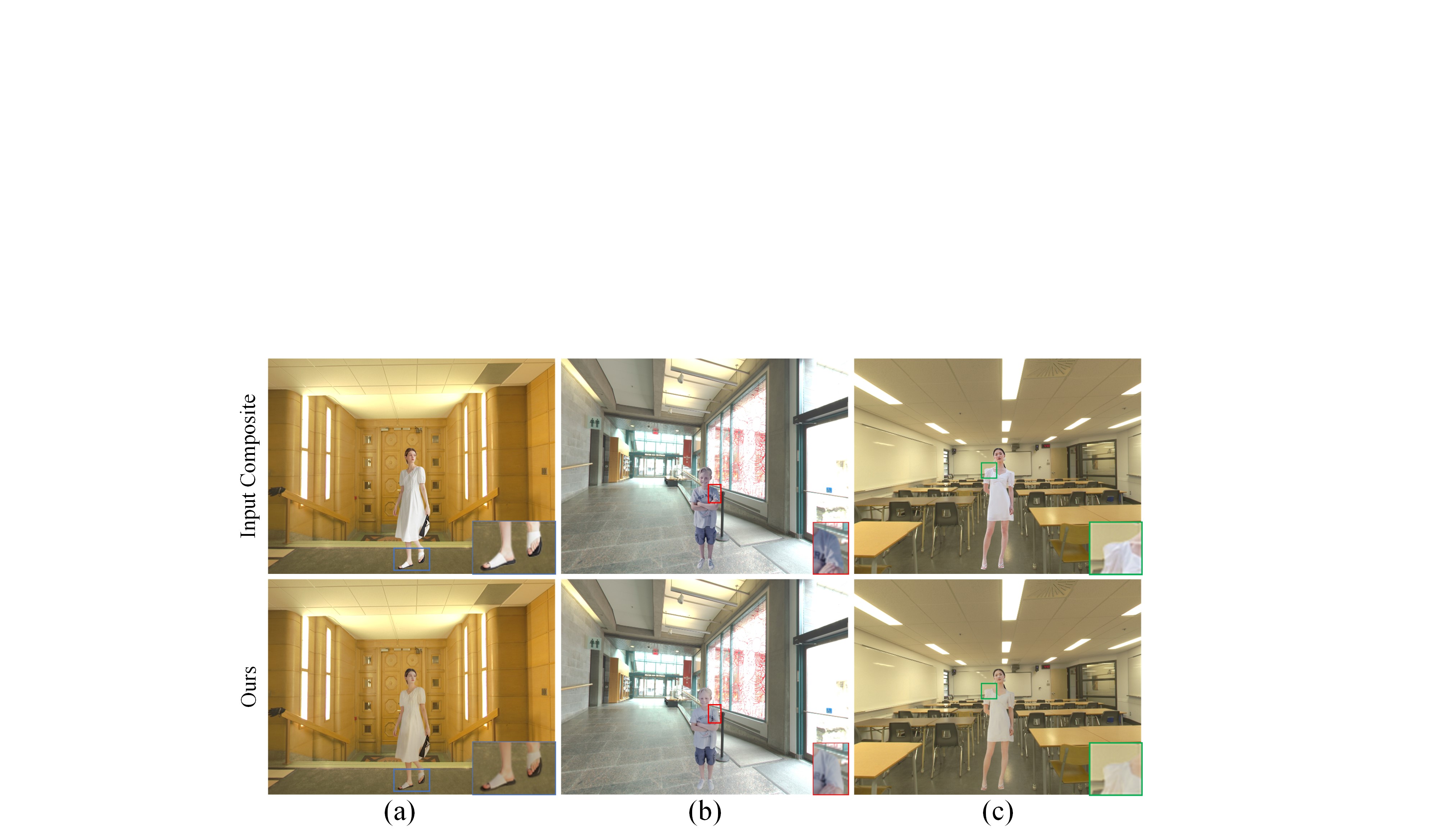

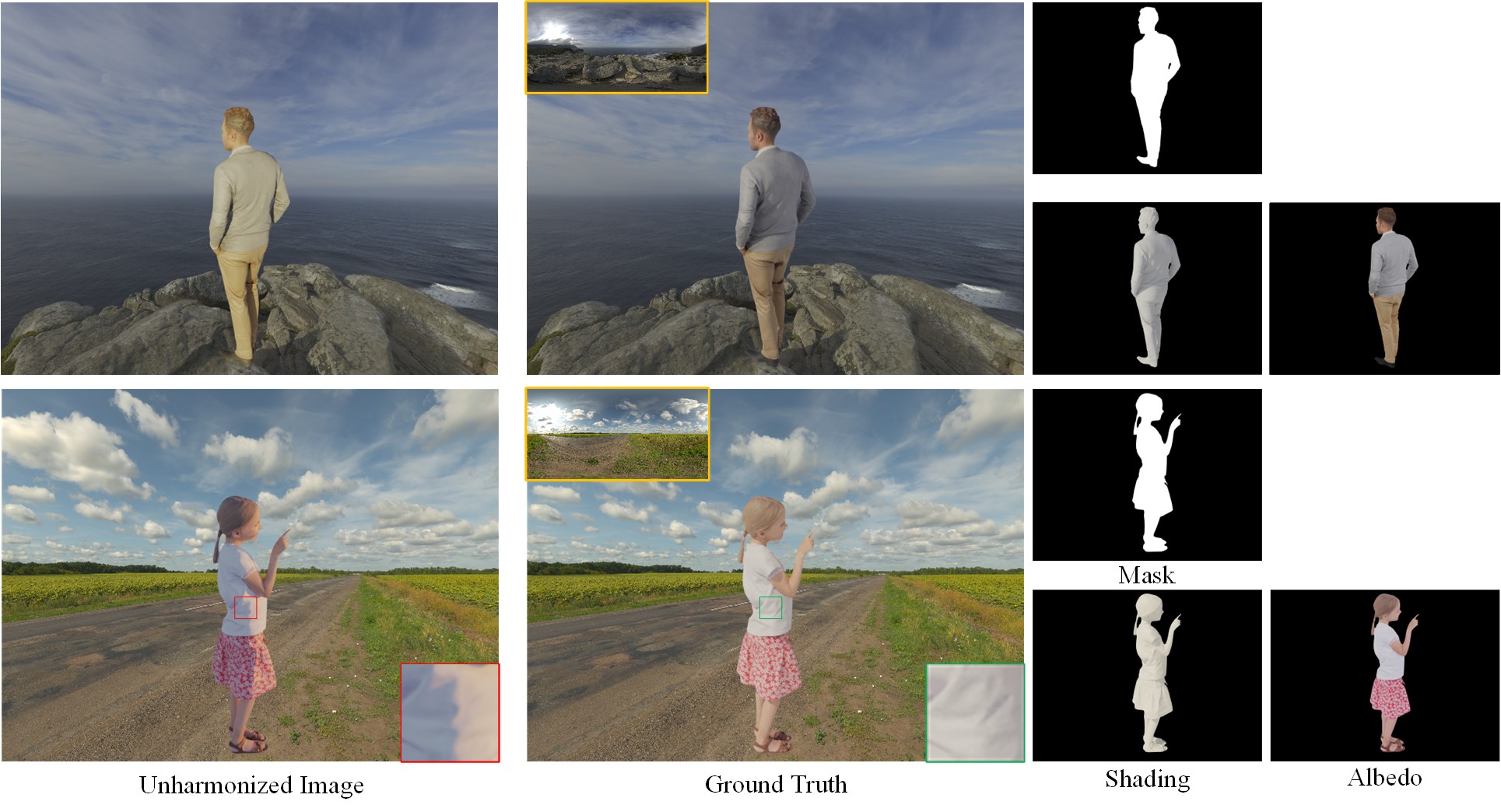

High-quality, photorealistic examples from our constructed dataset. The red and green insets in the bottom row highlight challenging shading variations in the dataset.